I’m currently a Ph.D. student at the Gaoling School of Artificial Intelligence, Renmin University of China, advised by Prof. Yankai Lin.

I am also conducting research at the Natural Language Processing Lab at Tsinghua University (THUNLP), supervised by Prof. Xin Cong. My research interests include language agents and reinforcement learning (RL) algorithms.

🔥 News

- 2025.09: 🎉🎉 MetaFlowLLM is accepted to NeurIPS 2025!

📝 Publications

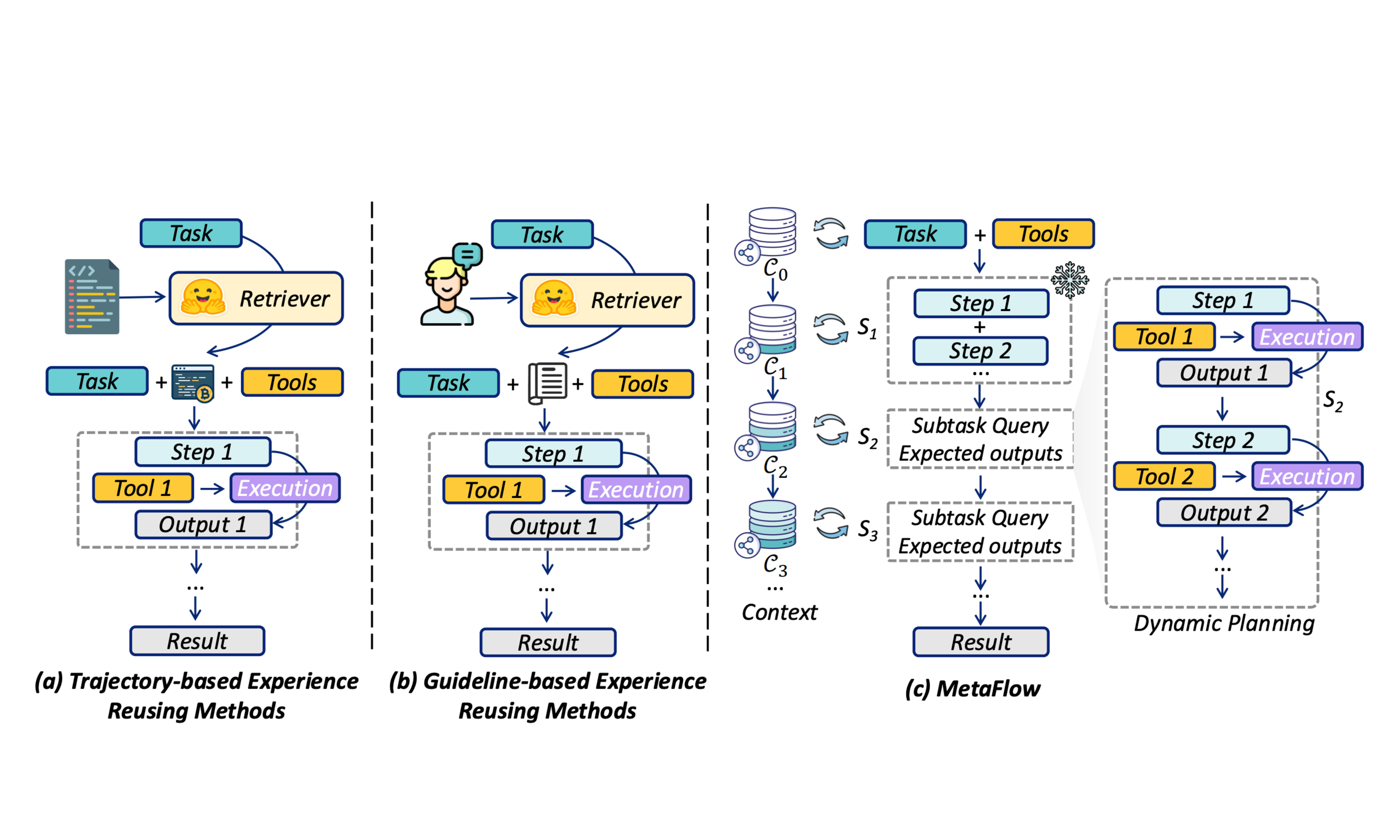

Generalizing Experience for Language Agents with Hierarchical MetaFlows

Shengda Fan, Xin Cong, Zhong Zhang, Yuepeng Fu, Yesai Wu, Hao Wang, Xinyu Zhang, Enrui Hu, Yankai Lin

- Proposed MetaFlowLLM, a framework that builds a hierarchical experience tree from previously completed tasks for language-agent workflows. Its hierarchical MetaFlow merging algorithm enables experience reuse, leading to higher success rates on AppWorld and WorkBench.

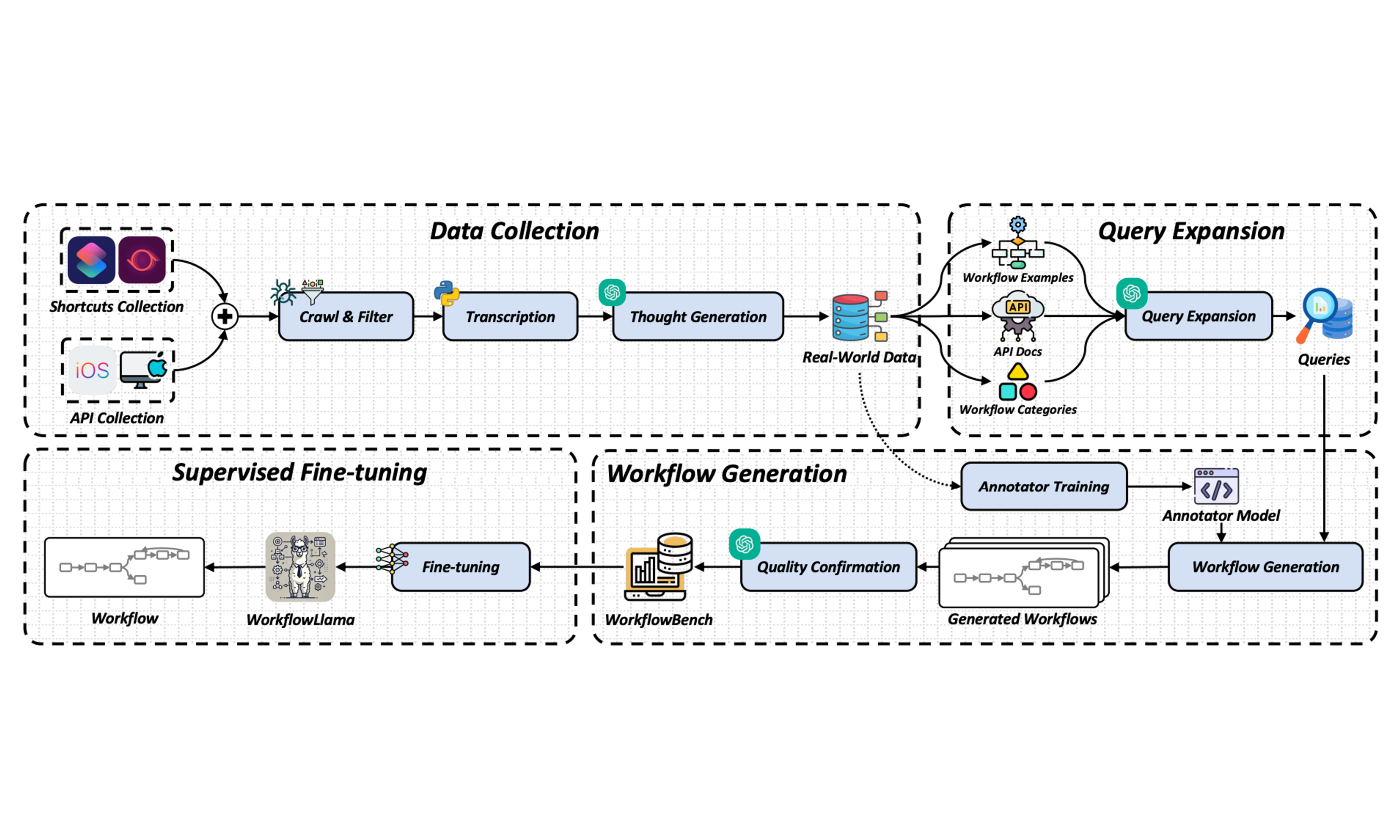

WorkflowLLM: Enhancing Workflow Orchestration Capability of Large Language Models

Shengda Fan, Xin Cong, Yuepeng Fu, Zhong Zhang, Shuyan Zhang, Yuanwei Liu, Yesai Wu, Yankai Lin†, Zhiyuan Liu, Maosong Sun

- Introduced WorkflowLLM, a data‑centric framework that improves the workflow orchestration capability of large language models (LLMs) by constructing a large‑scale fine‑tuning dataset, WorkflowBench, and fine‑tuning an LLM on it, yielding strong performance on previously unseen APIs and instructions.

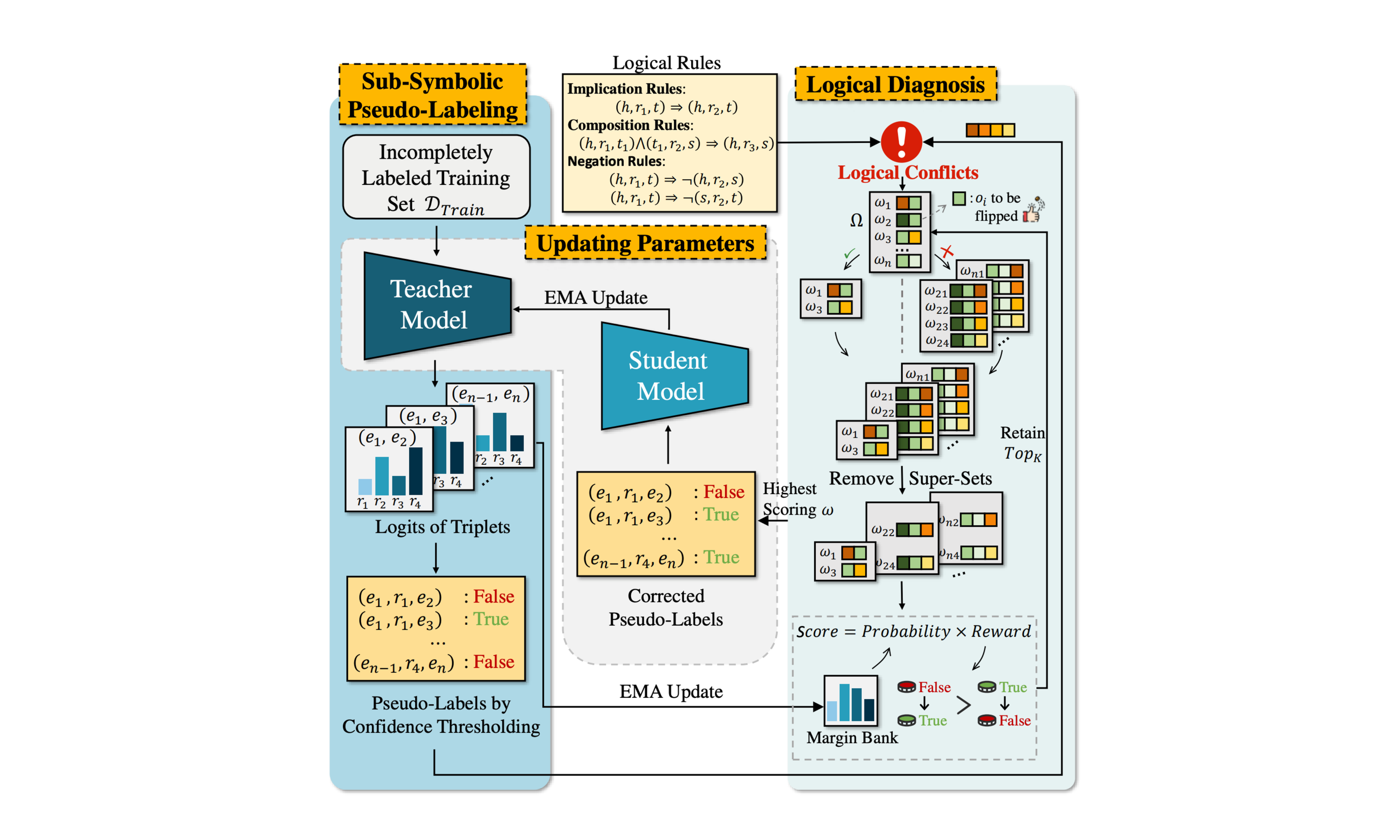

Shengda Fan, Yanting Wang, Shasha Mo, Jianwei Niu

- Introduced LogicST, a neural-logical self-training framework that uses logical rules to diagnose conflicts among pseudo-labels, improving performance on document-level relation extraction.

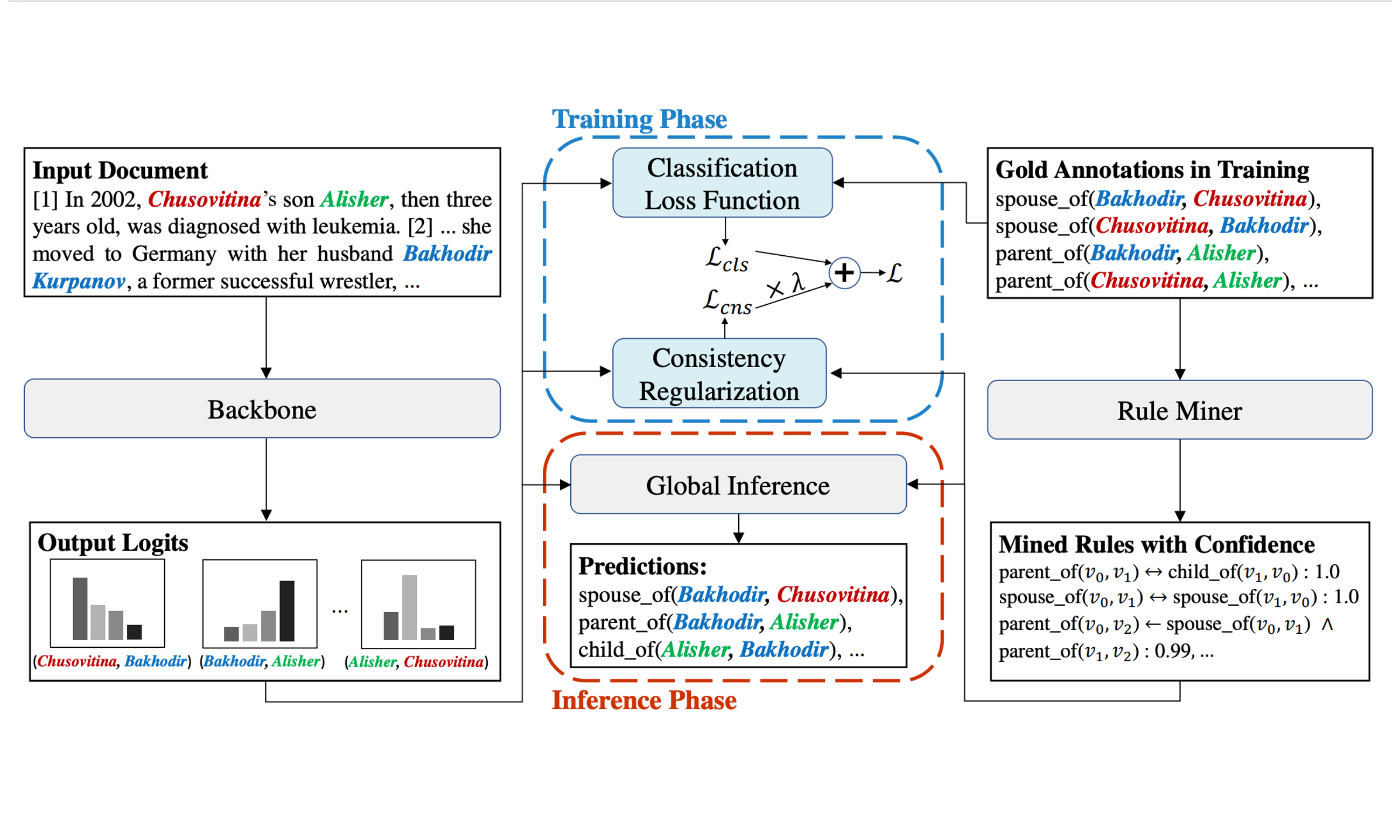

Boosting Document‑Level Relation Extraction by Mining and Injecting Logical Rules

Shengda Fan, Shasha Mo, Jianwei Niu


- Proposed MILR, a framework that mines logical rules from annotations and injects them into both training and inference, improving prediction consistency and F1 scores in document-level relation extraction.

- Key Mention Pairs Guided Document‑Level Relation Extraction, Feng Jiang, Jianwei Niu, Shasha Mo, Shengda Fan, COLING 2022

- CETA: A Consensus Enhanced Training Approach for Denoising in Distantly Supervised Relation Extraction, Ruri Liu, Shasha Mo, Jianwei Niu, Shengda Fan, COLING 2022

📖 Education

- 2024.09 - now, Ph.D. student, Renmin University of China, Beijing.

- 2021.09 - 2024.06, Master's, Beihang University, Beijing.

- 2017.09 - 2021.06, Bachelor's, Beihang University, Beijing.

💻 Internships

- 2023.09 – 2023.11, Quant Research, E Fund, Guangzhou.

- 2023.04 – 2023.10, Algorithm Engineer, TikTok Live, ByteDance, Beijing.

- 2020.11 – 2021.01, Enterprise Services, ByteDance, Beijing.